NLU, a subset of natural language processing (NLP) and conversational AI, helps conversational AI applications to determine the purpose of the user and direct them to the relevant solutions. Adjudication rules refer to rules that are applied during evaluation to allow non-exact matches to count as accurate predictions. In some cases, there may be entities or intents that you want to have considered as equivalent, such that either counts as correct for an accuracy calculation. For example, some applications may not care whether an utterance is classified as a custom OUT_OF_DOMAIN intent or the built-in NO_MATCH intent.
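As a rough illustration of how an adjudication rule changes an accuracy figure, the sketch below scores the same predictions with and without an equivalence rule. The rule format (`EQUIVALENT_INTENTS`), the function names, and the sample data are assumptions made for this example, not the syntax of any particular tool.

```python
from typing import Iterable

# Hypothetical rule set: each frozenset lists labels treated as interchangeable.
EQUIVALENT_INTENTS = [frozenset({"OUT_OF_DOMAIN", "NO_MATCH"})]


def is_match(expected: str, predicted: str, rules: Iterable[frozenset]) -> bool:
    """Return True on an exact match or when one rule contains both labels."""
    if expected == predicted:
        return True
    return any(expected in group and predicted in group for group in rules)


def adjudicated_accuracy(pairs, rules=EQUIVALENT_INTENTS) -> float:
    """Fraction of (expected, predicted) pairs counted as correct under the rules."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    correct = sum(is_match(e, p, rules) for e, p in pairs)
    return correct / len(pairs)


# Made-up evaluation results: (expected intent, predicted intent).
results = [
    ("ORDER_DRINK", "ORDER_DRINK"),   # exact match
    ("OUT_OF_DOMAIN", "NO_MATCH"),    # correct only under the equivalence rule
    ("CHANGE_ORDER", "ORDER_DRINK"),  # genuine error
    ("NO_MATCH", "NO_MATCH"),         # exact match
]

strict = adjudicated_accuracy(results, rules=[])  # no adjudication
lenient = adjudicated_accuracy(results)           # with the equivalence rule
```

With this toy data, the strict score counts two of four predictions as correct and the adjudicated score counts three of four.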

MTT supports adding adjudication rules for considering different intents or entities to be equivalent, as well as for ignoring entities entirely. Note that if the validation and test sets are drawn from the same distribution as the training data, then we expect some overlap between these sets (that is, some utterances will be found in multiple sets). The most obvious alternatives to uniform random sampling involve giving the tail of the distribution more weight in the training data. For example, selecting training data randomly from the list of unique usage data utterances will result in training data where commonly occurring usage data utterances are significantly underrepresented.
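The effect of sampling from unique utterances can be seen with a small, made-up usage log. The sketch below compares how often a frequent utterance appears in the raw log with how often it appears in the de-duplicated list; the utterances and counts are invented for illustration.

```python
import random
from collections import Counter

# Toy usage log (made-up data): one frequent request and two rare ones.
usage_log = ["order a latte"] * 8 + ["change my order"] + ["cancel my order"]


def share(utterances, target):
    """Proportion of `utterances` that are exactly equal to `target`."""
    return Counter(utterances)[target] / len(utterances)


# Uniform sampling from the raw log preserves production frequencies in expectation.
raw_share = share(usage_log, "order a latte")

# Sampling from the de-duplicated list gives every distinct utterance equal weight.
unique_utterances = sorted(set(usage_log))
unique_share = share(unique_utterances, "order a latte")

# Drawing an actual training sample from the raw log; seeded for reproducibility.
rng = random.Random(0)
training_sample = rng.sample(usage_log, k=5)
```

Here the frequent utterance makes up 80% of the raw log but only one third of the unique list, which is the underrepresentation described above.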

Critical features of AI implementation in business

NLU helps to improve the quality of clinical care by improving decision support systems and the measurement of patient outcomes. Currently, the quality of NLU in some non-English languages is lower because those languages have less commercial potential. NLU, the technology behind intent recognition, enables companies to build efficient chatbots.

Once satisfied with the model’s performance on the validation set, the final test is done using the test set. This set of unseen data helps gauge the model’s performance and its ability to generalize to new, unseen data. Denys spends his days trying to understand how machine learning will impact our daily lives—whether it’s building new models or diving into the latest generative AI tech. When he’s not leading courses on LLMs or expanding Voiceflow’s data science and ML capabilities, you can find him enjoying the outdoors on bike or on foot. In the data science world, Natural Language Understanding (NLU) is an area focused on communicating meaning between humans and computers.
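A minimal sketch of the train, validation, and test workflow described above, assuming the labeled data is a simple list of `(utterance, intent)` pairs. The function name, default fractions, and sample data are assumptions for illustration only.

```python
import random


def split_dataset(examples, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle once, then cut the data into train, validation, and test lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    validation = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, validation, test


# Made-up labeled examples: (utterance, intent).
examples = [(f"utterance {i}", "ORDER_DRINK") for i in range(20)]
train, validation, test = split_dataset(examples)
```

The validation list is used repeatedly while tuning the model. The test list is held back and scored once at the end, so that it stays unseen.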

Tuning Your NLU Model

Natural language processing is a category of machine learning that analyzes freeform text and turns it into structured data. Natural language understanding is a subset of NLP that classifies the intent, or meaning, of text based on the context and content of the message. The difference between NLP and NLU is that natural language understanding goes beyond converting text to its semantic parts and interprets the significance of what the user has said. For reasons described below, artificial training data is a poor substitute for training data selected from production usage data. In short, prior to collecting usage data, it is simply impossible to know what the distribution of that usage data will be.

Automate data capture to improve lead qualification, support escalations, and find new business opportunities. For example, ask customers questions and capture their answers using Access Service Requests (ASRs) to fill out forms and qualify leads. With the availability of APIs like Twilio Autopilot, NLU is becoming more widely used for customer communication.

Define intents and entities that are semantically distinct

You need a wide range of training utterances, but those utterances must all be realistic. If you can’t think of another realistic way to phrase a particular intent or entity, but you need to add additional training data, then repeat a phrasing that you have already used. Having said that, in some cases you can be confident that certain intents and entities will be more frequent. For example, in a coffee-ordering NLU model, users will certainly ask to order a drink much more frequently than they will ask to change their order.

- Some frameworks, such as Rasa or Hugging Face transformer models, allow you to train an NLU model on your local computer.

- Hence the breadth and depth of “understanding” aimed at by a system determine both the complexity of the system (and the implied challenges) and the types of applications it can deal with.

- A dynamic list entity is used when the list of options is only known once loaded at runtime, for example a list of the user’s local contacts.

- After the data collection process, the information needs to be filtered and prepared.
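To make the dynamic list entity idea concrete, the sketch below matches an utterance against a list that is only supplied at runtime. It uses plain case-insensitive substring matching, which is an assumption made for this example; NLU platforms implement dynamic lists inside their own engines, and the function name and contact names here are hypothetical.

```python
def match_dynamic_list(utterance, options):
    """Return the options whose text appears in the utterance (case-insensitive)."""
    lowered = utterance.lower()
    return [option for option in options if option.lower() in lowered]


# The list is only available at runtime, for example loaded from the user's device.
contacts_loaded_at_runtime = ["Alice Chen", "Bob Ortiz", "Priya Nair"]

found = match_dynamic_list("please call bob ortiz now", contacts_loaded_at_runtime)
```

Because the options are passed in with each request, the model does not need to be retrained when the user's contact list changes.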

As a result of developing countless chatbots for various sectors, Haptik has excellent NLU skills. Haptik already has a sizable, high quality training data set (its bots have had more than 4 billion chats as of today), which helps chatbots grasp industry-specific language. Businesses use Autopilot to build conversational applications such as messaging bots, interactive voice response (phone IVRs), and voice assistants.

The Impact of NLU in Customer Experience

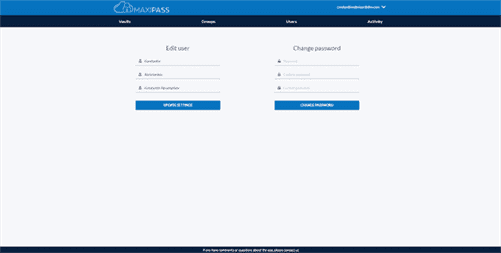

The tool doesn’t call your endpoint, so you don’t need to develop the service for your skill to test your model. If you’re experiencing issues with this product, go to the IBM Cloud Support Center and navigate to creating a case. Use the All products option to search for this product to continue creating the case or to find more information about getting support. Third party and community supported products might direct you to a support process outside of IBM Cloud.

Entity roles and groups make it possible to distinguish whether a city is the origin or destination, or whether an account is savings or checking. The main benefit of having this information in a data frame is that you can easily interact with other tools in the Python ecosystem. You can zoom in on a particular intent and you can make whatever charts you like. If you’re really interested and want to go further, you could even retrieve the machine learning features that were generated. The predictive abilities of machine learning models improve as they are exposed to more data. ATNs and their more general format called “generalized ATNs” continued to be used for a number of years.
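As a small illustration of entity roles, the sketch below picks the origin and destination out of two entities that share the same type. The dictionary keys (`entity`, `value`, `role`) and the sample values are assumptions for this example and may differ from the output of a given NLU framework.

```python
# Entities in the shape of an NLU result; the dictionary keys are assumptions.
extracted = [
    {"entity": "city", "value": "Berlin", "role": "origin"},
    {"entity": "city", "value": "Lisbon", "role": "destination"},
]


def value_for_role(entities, entity_type, role):
    """Return the first value with the given entity type and role, or None."""
    for item in entities:
        if item["entity"] == entity_type and item.get("role") == role:
            return item["value"]
    return None


origin = value_for_role(extracted, "city", "origin")
destination = value_for_role(extracted, "city", "destination")
```

Without the role, both entities would simply be labeled `city`, and the application would have to guess which one is the departure point.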

Support multiple intents and hierarchical entities

When building conversational assistants, we want to create natural experiences for the user, assisting them without the interaction feeling too clunky or forced. To create this experience, we typically power a conversational assistant using an NLU. The Rasa stack also connects with Git for version control. Treat your training data like code and maintain a record of every update. Easily roll back changes and implement review and testing workflows for predictable, stable updates to your chatbot or voice assistant. Rasa Open Source is the most flexible and transparent solution for conversational AI, and open source means you have complete control over building an NLP chatbot that really helps your users. You can clone the repository to follow along, but you can also run the steps shown here on your own project.

We recommend that you configure these options only if you are an advanced TensorFlow user and understand the implementation of the machine learning components in your pipeline. These options affect how operations are carried out under the hood in TensorFlow. An alternative to ConveRTFeaturizer is the LanguageModelFeaturizer, which uses pre-trained language models such as BERT, GPT-2, etc. to extract similar contextual vector representations for the complete sentence. The arrows in the image show the call order and visualize the path of the passed context.

Run data collections rather than rely on a single NLU developer

The order can consist of one of a set of different menu items, and some of the items can come in different sizes. We also include a section of frequently asked questions (FAQ) that are not addressed elsewhere in the document.